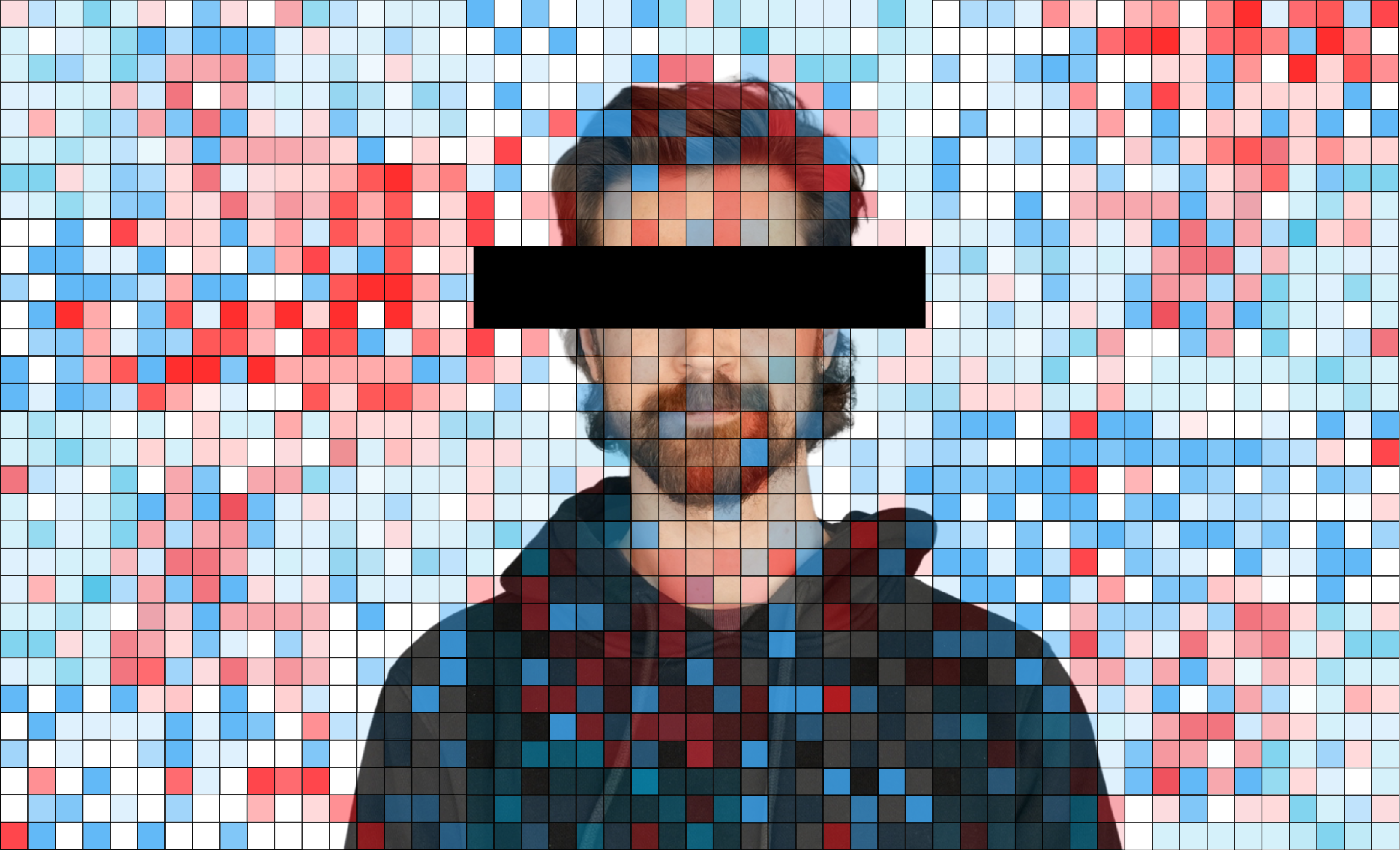

Occlusion: Permutation-based Saliency Maps for Computer Vision

Deep learning models rely on certain features in an image to make decisions. These are aspects like the colour of

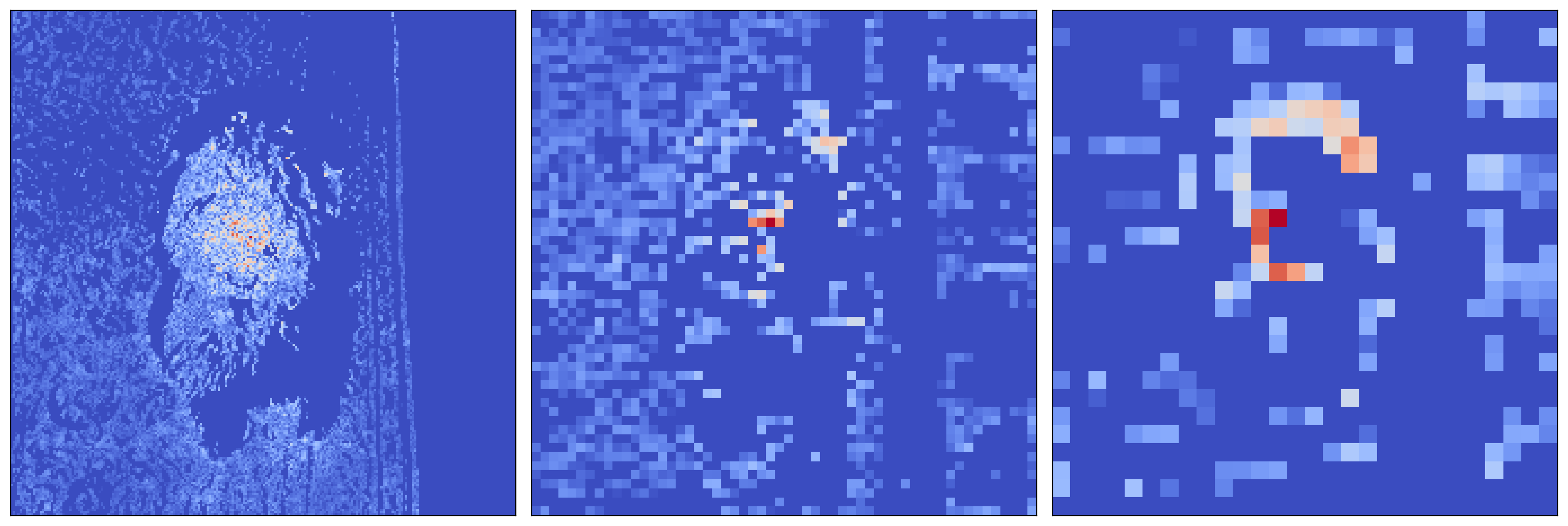

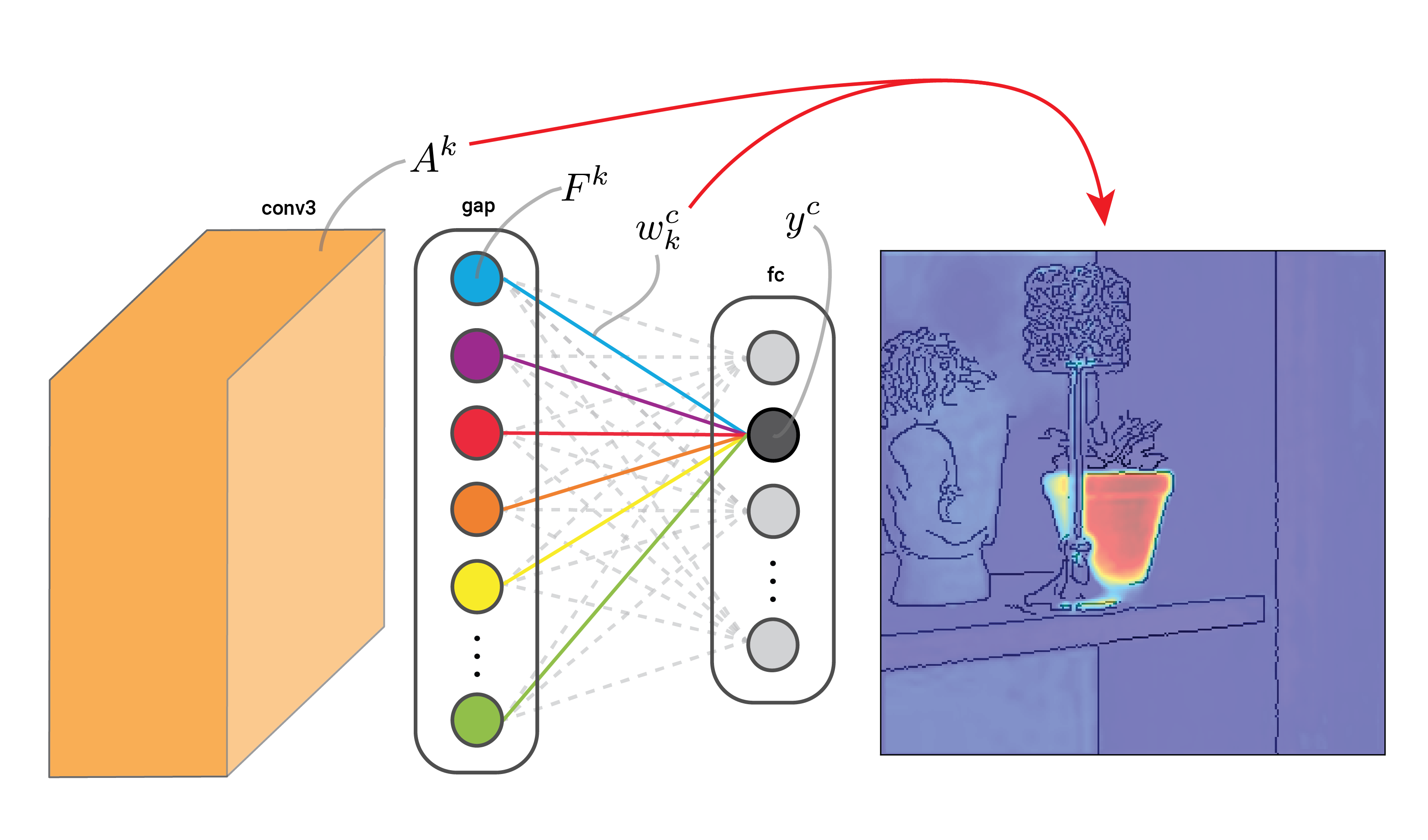

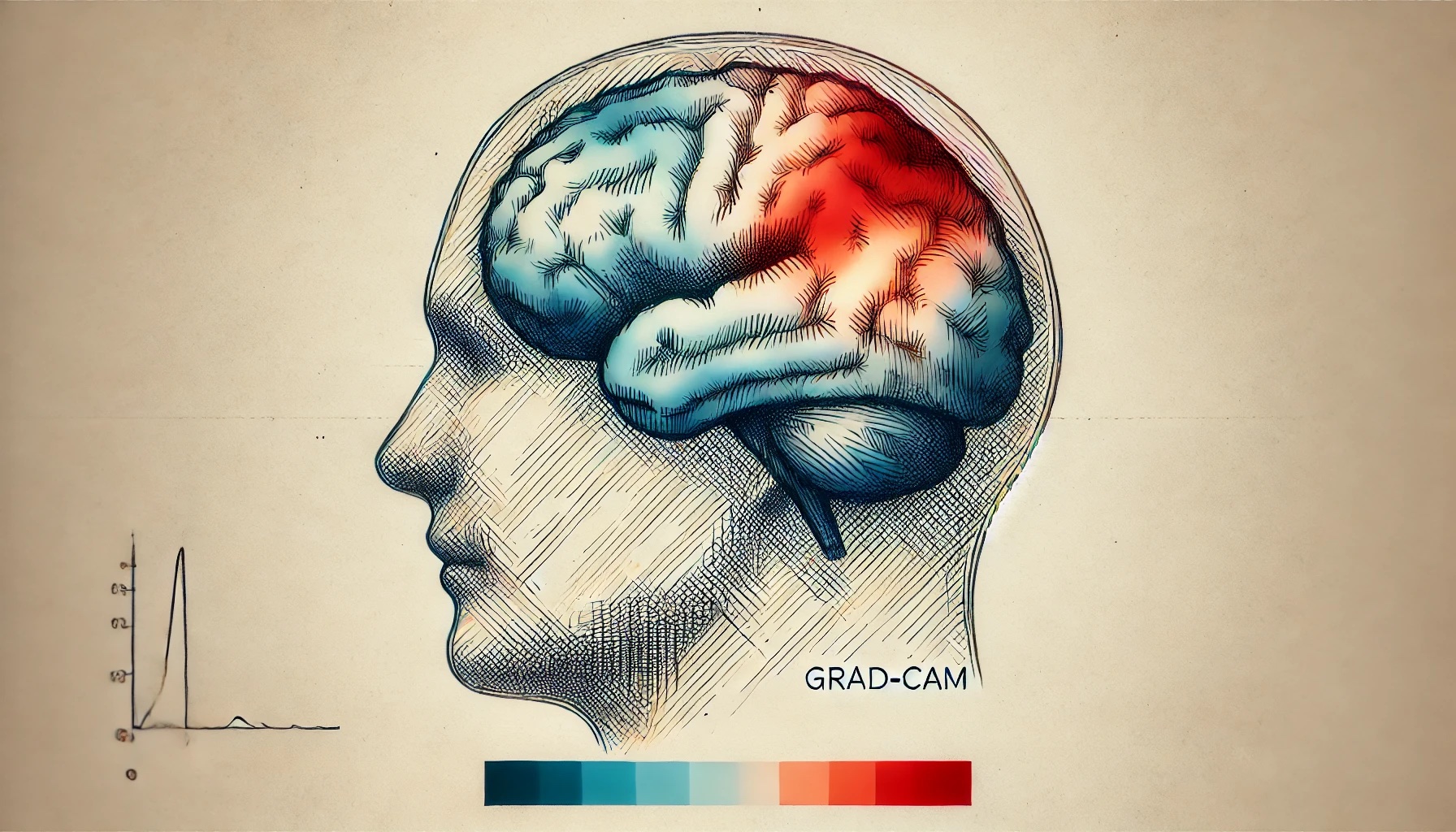

Convolutional neural networks (CNNs) make decisions using complex feature hierarchies. It is difficult to unveil these using methods like occlusion,

Interpretability by design is usually a conscious effort. Researchers will think of new architectures or adaptations to existing ones that

An X-ray AI system displays the result—there is a tumour. Thankfully, it is benign. However, the doctor is hesitant to

Welcome to Explainable AI for Computer Vision — a free course that covers the theory and Python code for XAI