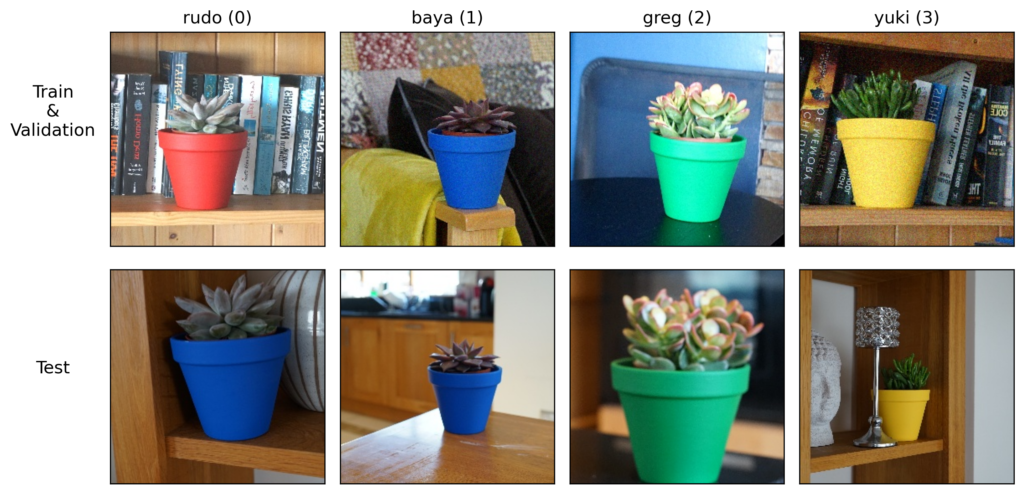

Like many millennials, I have satisfied my need to nurture with an unreasonably large pot plant collection, so large that I have completely lost track of them. In an attempt to remember their names (yes, they have names), I decided to build a machine learning algorithm.

I started with the four plants you see below: Rudo, Baya, Greg and Yuki. During the process of building this pot plant detector, something went horribly wrong…

I dropped Rudo’s pot!

Thankfully, this was a blessing in disguise. After replacing the pot, I found the model’s accuracy dropped dramatically. Specifically, it went from 94% on validation data to 70% on test data. All of the mistakes were coming from Rudo being misclassified as Baya. Clearly, something had gone wrong during the model training process.

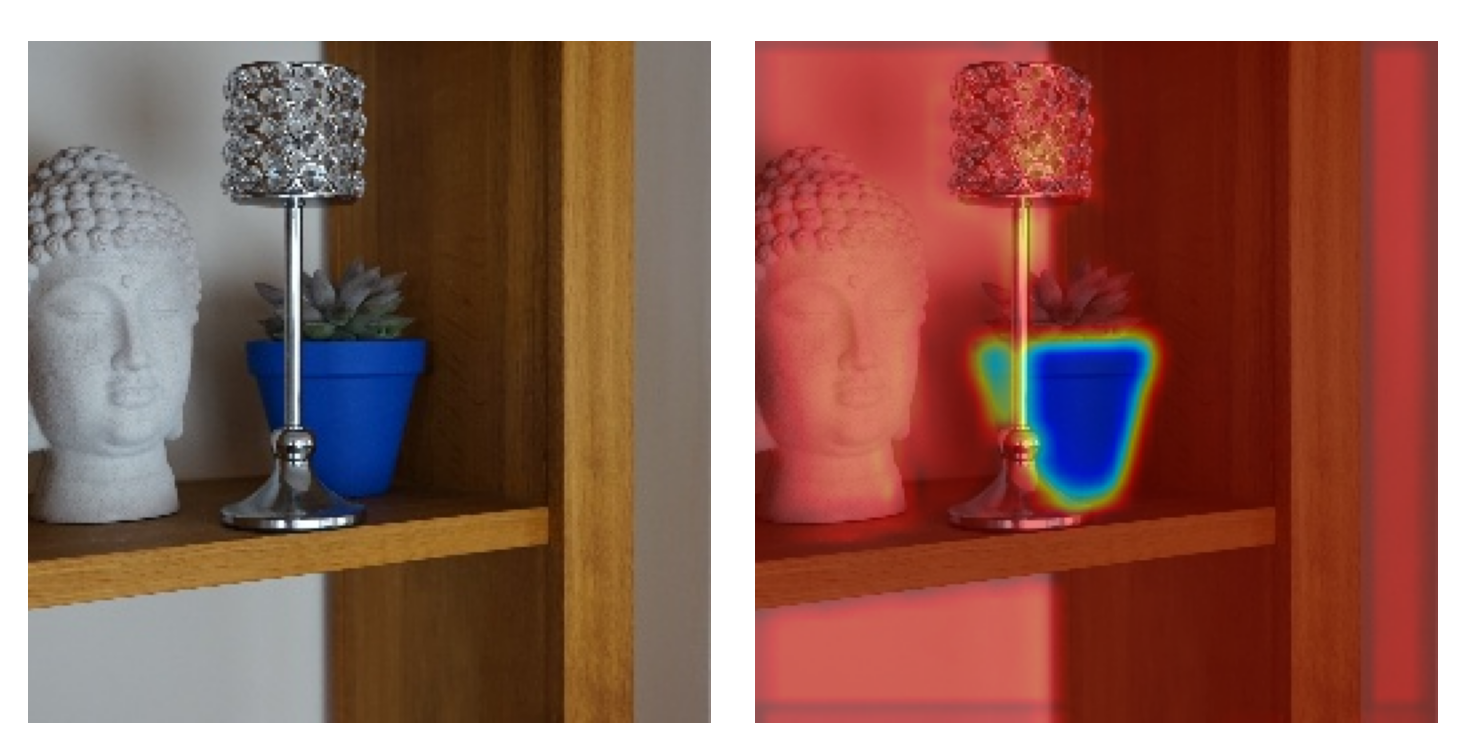

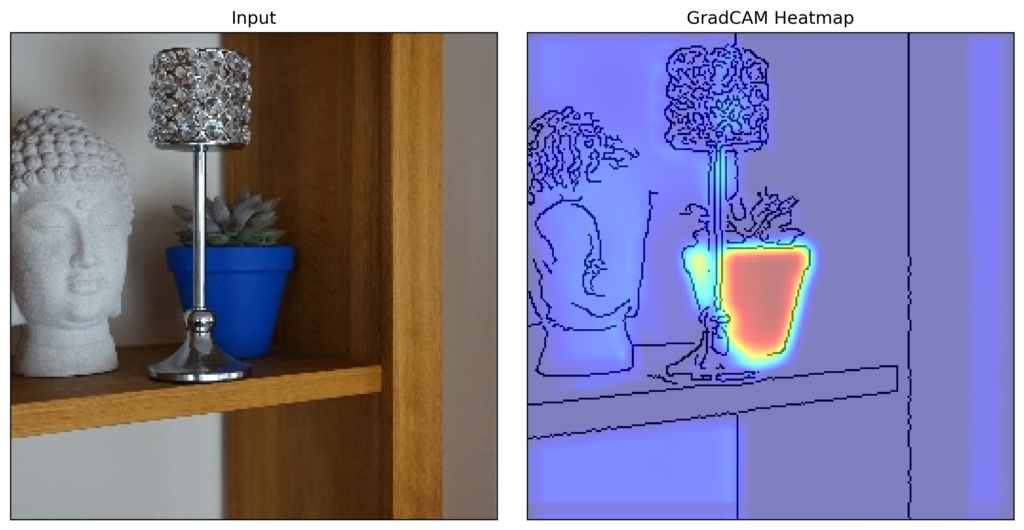

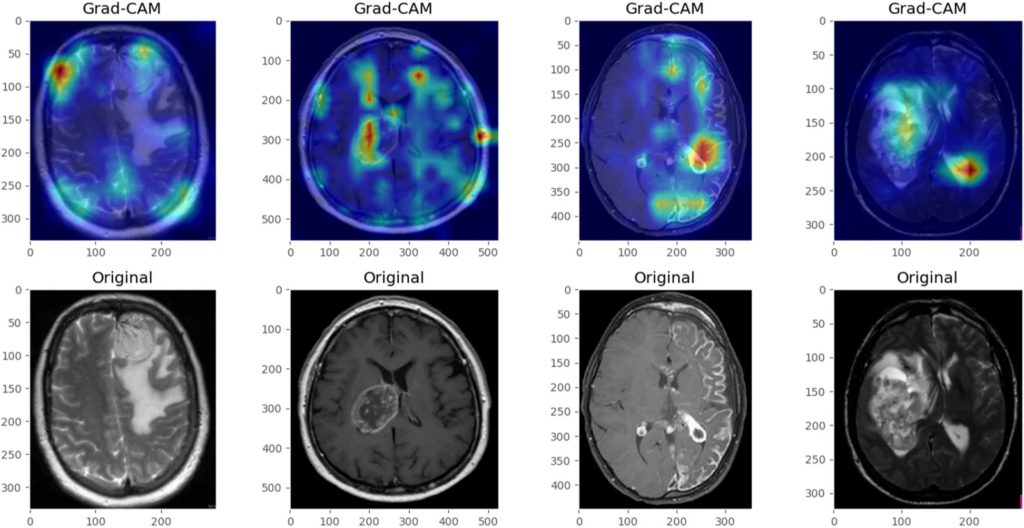

With the help of an XAI method called Grad-CAM [3], I discovered the problem. The model was using pixels from the pots to make classifications. Only Baya was in a blue pot in the training set. As Rudo was now in a blue pot, the model was misclassifying it. This is not what we want from a robust plant detector. It should be able to classify the plant regardless of what pot it is in.
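Grad-CAM [3] weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score with respect to that map, sums the weighted maps and keeps only the positive part. The NumPy sketch below shows only that final step. It assumes you have already extracted the layer's activations and the gradients of the target class score (for example with framework hooks); the function name `grad_cam` and the array shapes are illustrative choices, not the course's code.

```python
import numpy as np

def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM heatmap from one layer's feature maps.

    activations: shape (K, H, W), the K feature maps of the chosen layer.
    gradients:   shape (K, H, W), gradient of the class score w.r.t. each map.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    # Channel weights: global-average-pool the gradients over the spatial dims.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum of the feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    # Normalise to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example: two 2x2 feature maps; only the first has a positive gradient.
A = np.array([[[1.0, 0.0], [0.0, 0.0]],
              [[0.0, 0.0], [0.0, 1.0]]])
dA = np.array([[[1.0, 1.0], [1.0, 1.0]],
               [[-1.0, -1.0], [-1.0, -1.0]]])
heatmap = grad_cam(A, dA)
print(heatmap)  # only the top-left pixel is highlighted: [[1, 0], [0, 0]]
```

In practice, the low-resolution heatmap is upsampled to the image size and overlaid on the photo, which is how pot pixels lighting up would become visible.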

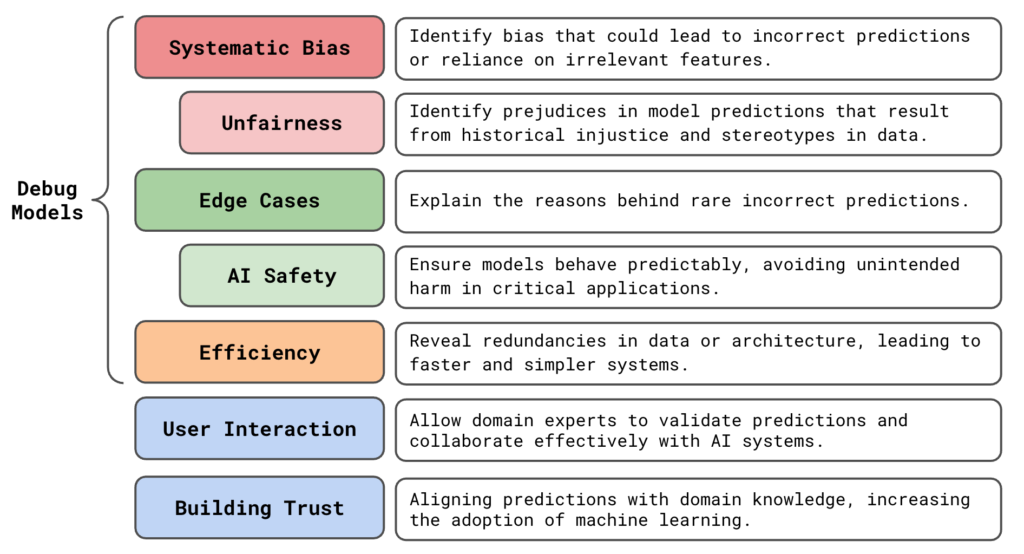

This is an example of the most important benefit of XAI for computer vision: identifying unknown or hidden bias in machine learning models. We will discuss this along with the other benefits summarized below. Ultimately, this section aims to convince you that XAI goes beyond simply understanding a model.

The insights of XAI methods can reveal issues with model robustness and accuracy. They allow us to be more certain that the models we develop will not cause any undesired harm. They can even help reduce the complexity of our AI systems. Left undetected, these can all be serious problems. Identifying them before deployment can save you a lot of time and money.


Identifying systematic bias (mistakes) in machine learning models with XAI

Bias in machine learning is a broad term. Here, we use bias to mean any systematic error that results in the model:

- making incorrect predictions or

- making correct predictions but with misleading or irrelevant features

XAI can help explain the first problem, but it is with the second that it becomes particularly important. We refer to this as hidden bias, as it can go undetected by evaluation metrics.

Okay, so for our pot plant detector, we did know there was bias. But this was only after we replaced Rudo’s pot. If we hadn’t, the evaluation metrics would have provided similar results for all datasets. This is because the same bias would be present in the training, validation and test sets. This would be true even if the test images were taken independently of the other data.

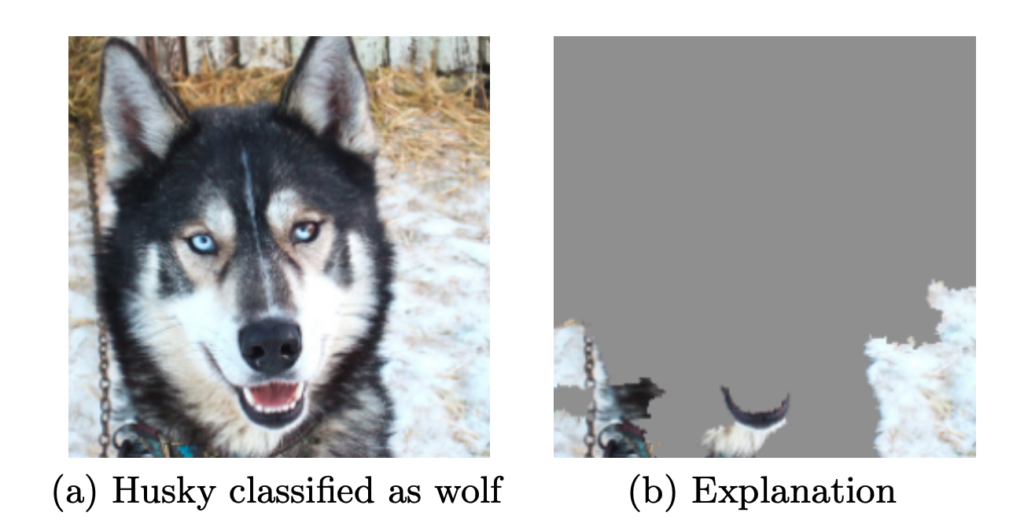

To a seasoned data scientist, the bias of brightly coloured pots may have been obvious. But bias is sneaky and can be introduced in many ways. Another example comes from the paper that introduced LIME [2]. The researchers trained a model that misclassified some huskies as wolves. Looking at Figure 4, we see it was making those predictions using background pixels. If the image had snow, the animal was always classified as a wolf.

The problem is that machine learning models only care about associations. The colour of the pots was associated with the plant. Snow is associated with wolves. Models can latch on to this information to make accurate predictions. This leads to incorrect predictions when that association is absent. The worst-case scenario is when the association only becomes absent with new data in production. This is what we aim to avoid with XAI.
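To see how a model can latch onto an association like pot colour, consider the toy sketch below. It is a hypothetical illustration, not the pot plant model: each image is reduced to two made-up binary features, `blue_pot` and `broad_leaves`, and the "model" is a decision stump that picks whichever single feature best separates Baya from Rudo in training. Because pot colour is a perfect predictor in the training data while the genuine leaf feature is slightly noisy, the stump chooses pot colour, and accuracy falls once Rudo moves to a blue pot.

```python
FEATURES = ["blue_pot", "broad_leaves"]  # hypothetical hand-made features

def accuracy(preds, labels):
    return sum(p == t for p, t in zip(preds, labels)) / len(labels)

def predict(stump, X):
    j, flip = stump
    return [1 - x[j] if flip else x[j] for x in X]

def fit_stump(X, y):
    """Return (feature index, flip) of the single feature that best predicts y."""
    best, best_acc = None, -1.0
    for j in range(len(X[0])):
        for flip in (False, True):
            acc = accuracy(predict((j, flip), X), y)
            if acc > best_acc:
                best, best_acc = (j, flip), acc
    return best

# Label 1 = Baya, 0 = Rudo. In training, only Baya is ever in a blue pot.
X_train = [(1, 1), (1, 1), (1, 0), (0, 0), (0, 0), (0, 0)]  # (1, 0): leaves hidden
y_train = [1, 1, 1, 0, 0, 0]
# After the repotting, Rudo is also in a blue pot.
X_test = [(1, 1), (1, 1), (1, 0), (1, 0)]
y_test = [1, 1, 0, 0]

stump = fit_stump(X_train, y_train)
print("Feature used:", FEATURES[stump[0]])                            # blue_pot
print("Train accuracy:", accuracy(predict(stump, X_train), y_train))  # 1.0
print("Test accuracy:", accuracy(predict(stump, X_test), y_test))     # 0.5
```

A real CNN is far more complex, but the incentive is the same: if a spurious cue is the easiest way to minimise training error, nothing in the training objective discourages using it.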

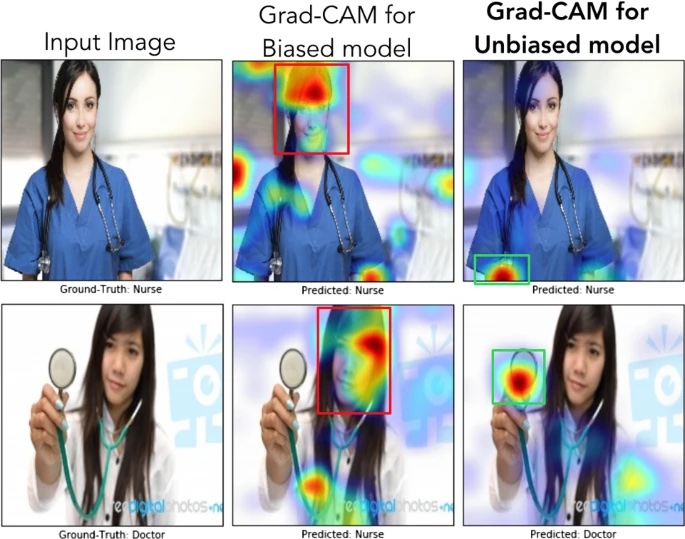

Identifying unfairness with XAI

Unfairness is a special case of systematic bias. It is any error that leads to a model that is prejudiced against a person or group. See Figure 5 for an example. The biased model is making classifications using the person’s face/hair. As a result, it will always classify an image of a woman as a nurse. In comparison, the unbiased model uses features that are intrinsic to the classification. These include short sleeves for the nurse and a white coat or stethoscope for the doctor.

These kinds of errors occur because historical injustice and stereotypes are reflected in our datasets. Like any association, a model can use these to make predictions. But just like an animal is not a wolf because it is in the snow, a person is not a doctor because they have short hair. And just like any systematic bias, we must identify and correct these types of mistakes. This will ensure that all users are treated fairly and that our AI systems do not perpetuate historical injustice.
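One way to make an explanation like Figure 5 more quantitative is to measure how much of a heatmap's total attribution falls inside a region that should be irrelevant, such as a box around the face and hair. The sketch below assumes you already have a non-negative heatmap (for example from Grad-CAM) and a hand-labelled box; the function name `attribution_share`, the box format and the toy values are illustrative assumptions, not a standard metric from the course.

```python
import numpy as np

def attribution_share(heatmap: np.ndarray, box: tuple[int, int, int, int]) -> float:
    """Fraction of total attribution inside box = (row0, row1, col0, col1).

    Rows/cols follow Python slicing, so row1 and col1 are exclusive.
    """
    heatmap = np.clip(heatmap, 0.0, None)  # ignore negative attributions, if any
    total = heatmap.sum()
    if total == 0:
        return 0.0
    r0, r1, c0, c1 = box
    return float(heatmap[r0:r1, c0:c1].sum() / total)

# Toy 4x4 heatmap where most attribution sits in the top-left "face" region.
heatmap = np.array([
    [0.9, 0.8, 0.0, 0.0],
    [0.7, 0.6, 0.0, 0.0],
    [0.0, 0.0, 0.1, 0.0],
    [0.0, 0.0, 0.0, 0.1],
])
face_box = (0, 2, 0, 2)  # hypothetical face/hair region
share = attribution_share(heatmap, face_box)
print(round(share, 4))  # 3.0 / 3.2 = 0.9375
```

If, across many images, most of the attribution consistently lands on the face or hair rather than on clothing or equipment, that would be a warning sign worth investigating.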

Explaining edge cases with XAI

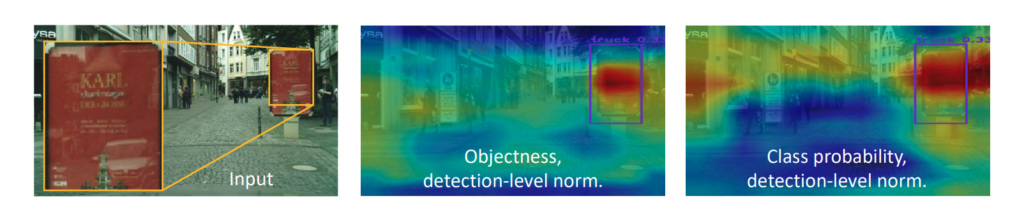

Not all incorrect predictions will be systematic. Some will be caused by rare or unusual instances that fall outside the model’s typical experience or training scope. We call these edge cases. An example is shown in Figure 6, where YOLO has falsely detected a truck in the image. The explanation method suggests this is due to “the white rectangular-shaped text on the red poster” [1].

Understanding what causes these edge cases will allow us to make changes to correct them. This could involve collecting more data for the specific scenarios reflected in the edge case. We should also remember that a model exists within a wider AI system, which makes decisions or performs actions based on the model’s predictions. This means some edge cases could be patched with post hoc fixes to how the predictions are used.
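As an illustration of such a post hoc fix, the hypothetical wrapper below only lets the wider system act on a detection automatically when the model is confident and the class is not one known to produce edge cases; everything else is routed for human review. The `Detection` type, the 0.8 threshold, the `review_labels` set and the `route` function are all assumptions for this sketch. In practice, they would be chosen from the edge cases you have explained.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    confidence: float  # model score in [0, 1]

def route(det: Detection, threshold: float = 0.8,
          review_labels: frozenset[str] = frozenset({"truck"})) -> str:
    """Decide what the wider AI system does with one prediction.

    Returns "act" if the system may act automatically, otherwise "review".
    """
    if det.confidence < threshold:
        return "review"  # low confidence: possibly an unusual input
    if det.label in review_labels:
        return "review"  # class with known edge cases (e.g. posters read as trucks)
    return "act"

print(route(Detection("car", 0.95)))    # act
print(route(Detection("truck", 0.97)))  # review
print(route(Detection("car", 0.40)))    # review
```

The model itself is unchanged; only the way its output is used is adjusted, which can be a quick stopgap while better training data is collected.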

Improving AI safety

Correcting edge cases is strongly related to improving the safety of models. In general, ML safety refers to any measure taken to ensure that AI systems do not cause unintended harm to users, society, or the environment. It is about ensuring that our models behave predictably under expected conditions as well as unexpected conditions. This can only be done by understanding how models are making predictions.

AI safety is always a concern. This is especially true when the systems interact with our physical world. We go from worrying about social media algorithms serving content that harms mental health to worrying about systems that can cause physical harm. Think about how accidents involving automated vehicles are always in the news. It is the edge cases missed by these systems that can lead to loss of human life.

Improving model efficiency with XAI

Until now, we have focused on how XAI can improve model performance and robustness. When deploying models, we must take a broader view of performance. We need to consider aspects like the speed and complexity of the entire AI system. In short, we are concerned with the model’s resource use. This is referred to as model efficiency.

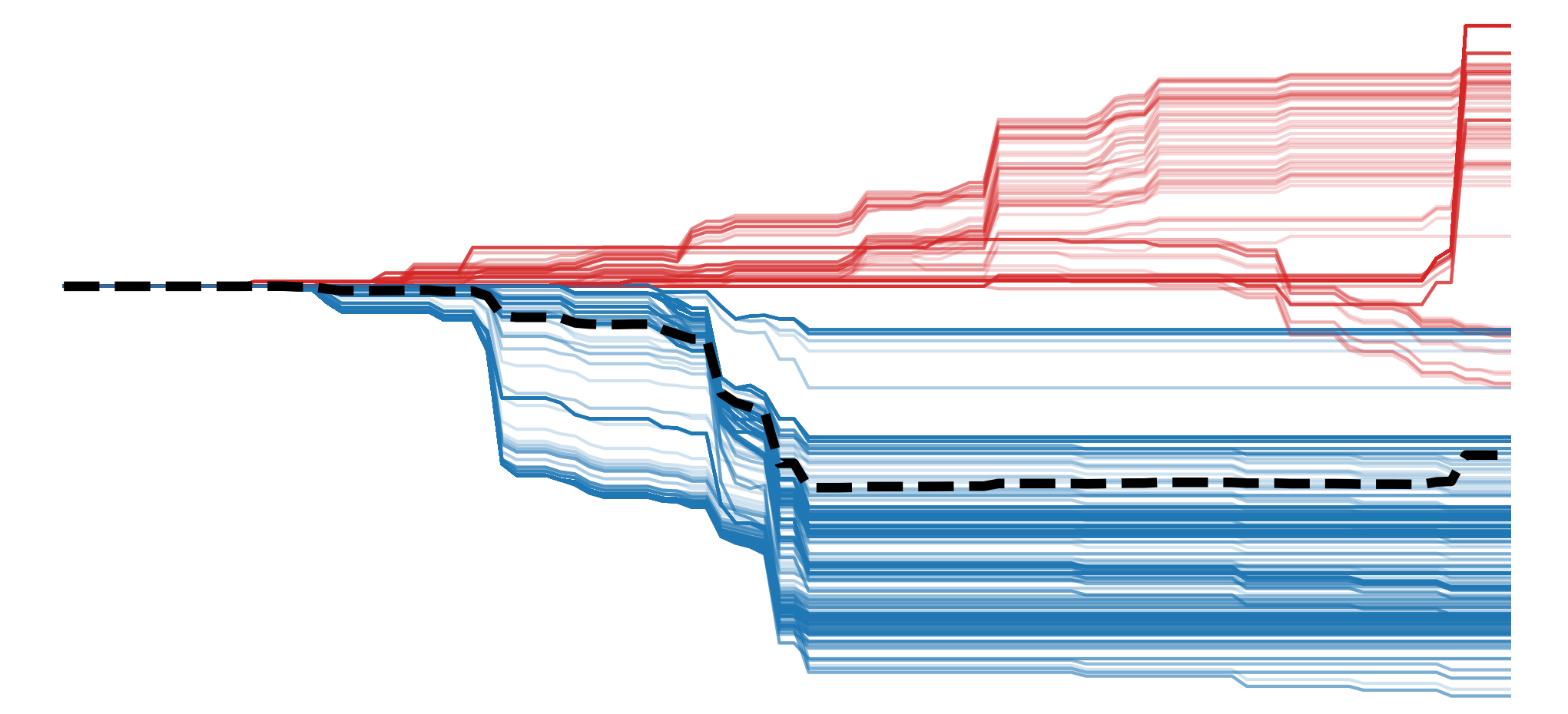

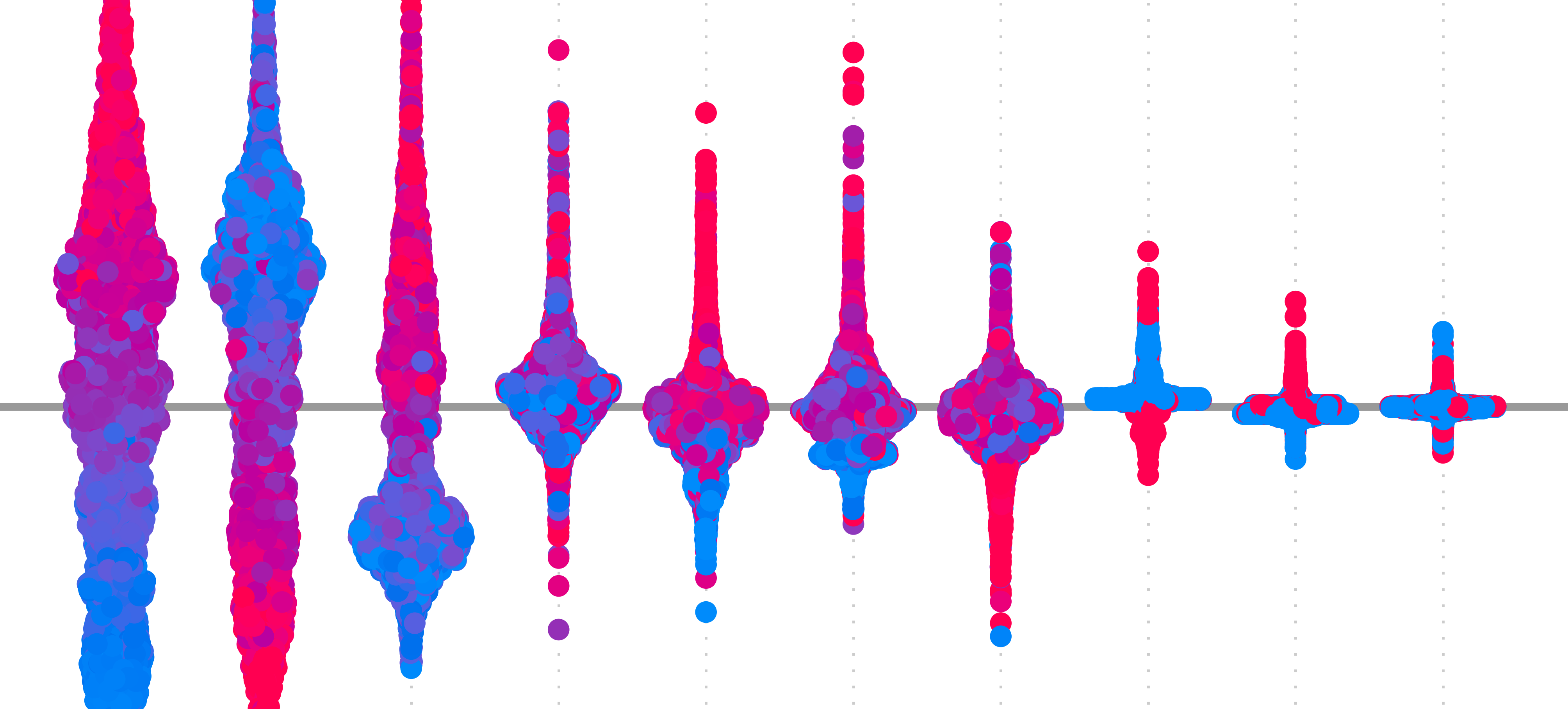

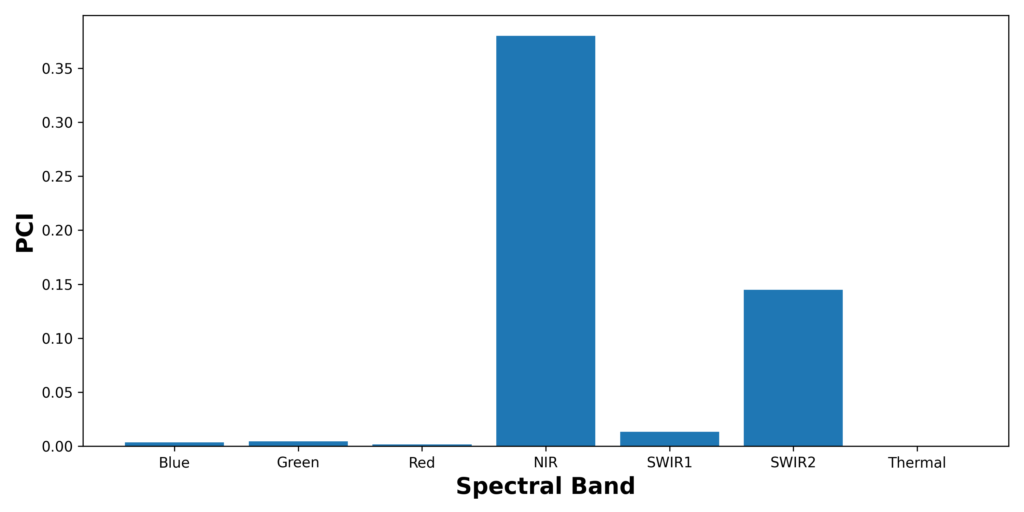

Using XAI, we can learn things that can help improve efficiency. For example, take the lesson where we used permutation channel importance to explain a coastal image segmentation model. Looking at Figure 7, we found that the model was only using 3 of the 12 spectral bands. We could potentially train a model using only these bands and significantly reduce our data requirements.
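Permutation channel importance can be sketched in a few lines: shuffle the values of one input channel, re-run the model and record how much the score drops. A channel the model ignores produces no drop. The NumPy sketch below is a simplified version under stated assumptions: `predict` is any function mapping an (N, C, H, W) batch to per-pixel labels, the score is pixel accuracy, and each channel is shuffled across all pixels of all images. The course's own implementation may differ in these details, and the toy model, which only looks at channel 1, is a hypothetical stand-in for the coastal segmentation model.

```python
import numpy as np

def permutation_channel_importance(predict, X, y, n_repeats=3, seed=0):
    """Mean drop in pixel accuracy when each channel of X (N, C, H, W) is shuffled."""
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for c in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffle this channel's values across every pixel of every image.
            values = X_perm[:, c].flatten()
            rng.shuffle(values)
            X_perm[:, c] = values.reshape(X.shape[0], X.shape[2], X.shape[3])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[c] = np.mean(drops)
    return importances

def toy_predict(X):
    # Hypothetical "water" mask: a pixel is water where channel 1 is below 0.5.
    return (X[:, 1] < 0.5).astype(int)

rng = np.random.default_rng(42)
X = rng.random((4, 3, 8, 8))  # 4 images, 3 channels, 8x8 pixels
y = toy_predict(X)            # labels the toy model gets exactly right
importances = permutation_channel_importance(toy_predict, X, y)
print(importances)  # channels 0 and 2 score exactly 0; channel 1 is roughly 0.5
```

With a real segmentation model, the same loop over 12 spectral bands would highlight which bands the model actually relies on.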

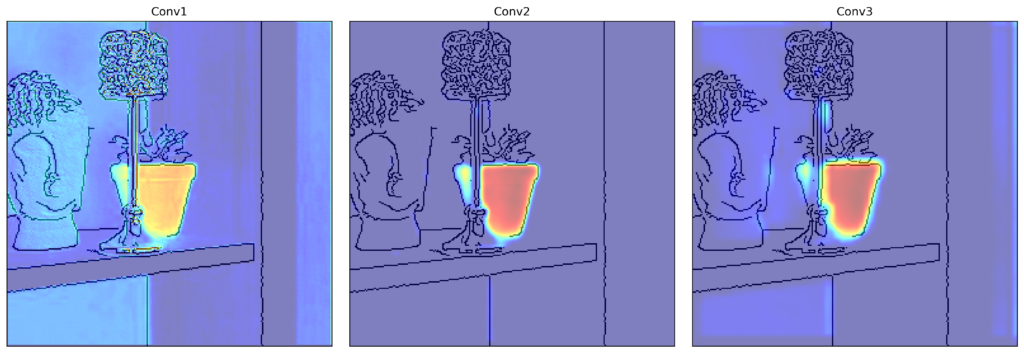

Another example comes from the lesson where we used Grad-CAM to explain a model used to classify images of house plants. The model was a CNN with three convolutional layers. Looking at Figure 7, we saw that each layer extracted similar features from the images. This suggests a simpler network, with fewer layers, would provide similar performance.
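If you have a Grad-CAM heatmap from each convolutional layer, one quick way to check whether they highlight the same regions is to correlate them. The sketch below is an assumption about how one might do this, not the method used in the lesson: it upsamples each square map to a common size by pixel repetition (so the sizes must divide evenly) and computes pairwise Pearson correlations. Correlations close to 1 for every pair would be consistent with the layers focusing on similar features. The heatmaps are made up for illustration.

```python
import numpy as np

def upsample(heatmap: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a square map to size x size (size must be a multiple)."""
    factor = size // heatmap.shape[0]
    return np.kron(heatmap, np.ones((factor, factor)))

def heatmap_correlations(heatmaps: list[np.ndarray], size: int) -> np.ndarray:
    """Pairwise Pearson correlation between flattened, upsampled heatmaps."""
    flat = np.stack([upsample(h, size).ravel() for h in heatmaps])
    return np.corrcoef(flat)

# Hypothetical heatmaps from three layers with decreasing spatial resolution.
layer1 = np.array([[0.0, 0.0, 1.0, 1.0],
                   [0.0, 0.0, 1.0, 1.0],
                   [0.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0]])
layer2 = np.array([[0.0, 1.0],
                   [0.0, 0.0]])
layer3 = np.array([[0.2, 0.9],
                   [0.1, 0.0]])
corr = heatmap_correlations([layer1, layer2, layer3], size=4)
print(np.round(corr, 2))  # all pairs are highly correlated in this toy case
```

High correlation between heatmaps is only a hint, not proof, that layers are redundant; the natural follow-up is to train a shallower network and compare its performance.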

These kinds of insights can be used in future model builds to improve model efficiency. By only including the data needed for accurate predictions, we can significantly cut down data processing time and storage space. Simpler input features and architectures will also provide faster predictions. The added benefit is that these simpler systems will be easier to interpret and explain.

The benefits we’ve mentioned up until now can all be summarised as debugging or improving a model. We want to identify any mistakes or inefficiencies in order to improve the performance, reliability, fairness and safety of the resulting AI systems. The next benefits are different. They are more about the humans who interact with the models.

Enhancing user interaction with AI systems

For many applications, XAI is necessary to provide more actionable information. For example, a medical imaging model can make a classification (i.e. a diagnosis). Yet, on its own, this has limited benefit. A patient would likely demand reasons for a diagnosis. The classification alone also does not provide any information on how severe the condition is or how it could best be treated.

Looking at Figure 8, you can see how the pixels that contributed to the positive diagnosis have been highlighted. Along with the classification, this provides more actionable information. That is, the doctor can confirm the classification, explain where the tumor is and how severe it is, and begin to understand the best course of action.
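To turn a heatmap like the one in Figure 8 into something a professional can check quickly, one simple option is to threshold it and report where the highlighted region is and how much of the image it covers. The sketch below assumes a heatmap already scaled to [0, 1] and aligned with the image; the function name `summarise_region`, the 0.5 threshold and the use of area as a rough indicator of extent are illustrative assumptions, not a validated clinical measure.

```python
import numpy as np

def summarise_region(heatmap: np.ndarray, threshold: float = 0.5) -> dict:
    """Inclusive bounding box (row_min, row_max, col_min, col_max) and pixel
    share of the area where heatmap >= threshold."""
    mask = heatmap >= threshold
    if not mask.any():
        return {"bbox": None, "area_fraction": 0.0}
    rows, cols = np.nonzero(mask)
    bbox = (int(rows.min()), int(rows.max()), int(cols.min()), int(cols.max()))
    return {"bbox": bbox, "area_fraction": float(mask.mean())}

heatmap = np.zeros((6, 6))
heatmap[1:3, 2:5] = 0.9  # hypothetical highlighted region
result = summarise_region(heatmap)
print(result)  # bbox (1, 2, 2, 4); area_fraction is 6 of 36 pixels
```

Any such summary only describes what the model attended to; it is the doctor's domain knowledge that decides whether it matches the actual pathology.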

In general, XAI can help us make use of machine learning in industries where it is too risky to fully automate a task. Using the output of XAI, a professional can apply their domain knowledge to ensure a prediction is made in a logical way. The output can also suggest how best to act on a prediction. Ultimately, through this type of interaction, we enable better human-machine collaboration.

Building trust in machine learning models

Even for applications that could be fully automated, XAI can help increase adoption. Machine learning has the potential to replace processes in finance, law or even farming. Yet, you would not expect your average farmer or lawyer to understand neural networks. The black-box nature of these models could make it difficult for them to accept predictions. Even in more technical fields, there can be mistrust of deep learning methods.

“Many scientists in hydrology remote sensing, atmospheric remote sensing, and ocean remote sensing etc. even do not believe the prediction results from deep learning, since these communities are more inclined to believe models with a clear physical meaning.” (Prof. Dr. Lizhe Wang)

XAI can be a bridge between computer science and other industries or scientific fields. It allows you to relate the trends captured by a model to the domain knowledge of professionals. We can go from explaining how correct the model is to why it is correct. If these reasons are consistent with their experience, a professional will be more likely to accept the model’s results.

Ultimately, when stakeholders understand the reasoning behind model predictions, it is easier to secure buy-in for AI solutions. This is particularly true in sensitive environments. In fact, as the world becomes more critical of AI and introduces more regulations, XAI will become necessary to meet legal and ethical requirements.

So hopefully you are convinced. XAI can help you identify and explain systematic biases and edge cases in your models. Its lessons can improve not only performance but also the efficiency, fairness and safety of your AI systems. By building trust and improving interactions, it can even increase the adoption of machine learning.

Interpretability by design is usually a conscious effort. Researchers design new architectures, or adaptations of existing ones, that allow you to understand how they work without additional methods. However, occasionally, we discover something about existing architectures that provides insights into their inner workings.

I hope you enjoyed this article! See the course page for more XAI courses. You can also find me on Bluesky | Threads | YouTube | Medium

Additional Resources

- The 6 Benefits of XAI (focused on tabular data)

- Get more out of XAI: 10 Tips

Datasets

Conor O’Sullivan, & Soumyabrata Dev. (2024). The Landsat Irish Coastal Segmentation (LICS) Dataset. (CC BY 4.0) https://doi.org/10.5281/zenodo.13742222

Conor O’Sullivan (2024). Pot Plant Dataset. (CC BY 4.0) https://www.kaggle.com/datasets/conorsully1/pot-plants

References

[1] Armin Kirchknopf, Djordje Slijepcevic, Ilkay Wunderlich, Michael Breiter, Johannes Traxler, and Matthias Zeppelzauer. Explaining YOLO: Leveraging Grad-CAM to explain object detections. arXiv preprint arXiv:2211.12108, 2022.

[2] Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 1135–1144, 2016.

[3] Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, pages 618–626, 2017.

[4] Mahesh T. R, Vinoth Kumar V, and Suresh Guluwadi. Enhancing brain tumor detection in MRI images through explainable AI using Grad-CAM with ResNet 50. BMC Medical Imaging, 24(1):107, 2024.
